By Ian Wagdin, Vice President of Technology & Innovation

This year at IBC, one of the biggest conversations will be around Media Exchange—what it is, why it matters, and how it’s shaping the future of software-defined production. As the industry evolves toward more modular, cloud-native workflows, the ability to move media efficiently between applications is becoming mission-critical. At Appear we are actively engaging in several industry initiatives, helping define what Media Exchange means in practice—for us, our partners, and the wider ecosystem. In this blog, I’ll break down the work underway, the technologies involved, and why it’s so important to the future of live media production.

What is Media Exchange?

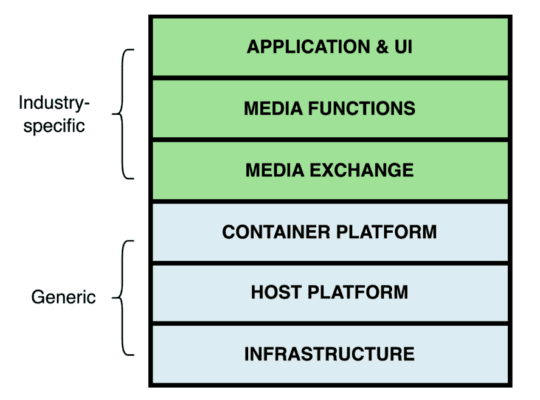

Last year at IBC, the EBU launched their Dynamic Media Facilities (DMF) reference architecture, which describes the technology stack required to build a fully functional, interoperable software-defined production facility. In essence, it divides the stack into layers and describes which elements will come from IT providers and which will be required from media application vendors. The core assumption is that the lower layers of the stack will use commodity off-the-shelf components to provide elements such as connectivity and storage. These could be on premises or in a private or public cloud. The key is to make sure they are built in a way that does not require specialist broadcast technologies, which are rapidly moving from hardware to software implementations. We can use generic technologies to provide transport and a host platform, and then deploy containerised software that lets end users run the functions they require. The bottom three layers can all be generic IT-based systems.

The next layer up is the media exchange layer, where media and data are moved around within the ecosystem. Above this sit the media-specific functions, and the applications that control them, which will be vendor specific.

From this we can see that the heart of the system is the ability to move media across generic infrastructure, and between applications, as the deployed workflows require. Of course, we could simply stream the video from one application to another, but that would require converting the data into and out of a common streaming format, or into uncompressed baseband signals. All of this takes processing power and time, and if the workflow has multiple steps it can add significantly to the processing overhead. If the workflow is distributed over a wide area with multiple compute nodes, protocols such as SRT may also be needed, adding further complexity and latency.

In an IT-centric world, with multiple media functions all running in microservice containers, this quickly becomes a very complex deployment, and what is needed is a better way to move data between applications. This is where Media Exchange comes into play.

In the world of high-performance computing this is not a new problem, and the IT world has developed several solutions in which data is moved through memory shared by several applications. This is relatively easy if all the applications run on the same physical hardware, but in a cloud-native environment we need to share memory across several compute nodes to optimise performance. To overcome this, a technique known as RDMA has been developed. RDMA stands for Remote Direct Memory Access, a high-performance networking technology that allows one computer to access the memory of another directly, without involving the CPU or operating system on either side in the data transfer. Essentially, RDMA bypasses the kernel (the heart of the OS, controlling everything from CPU time to memory to hardware devices) and the CPU during data transfer, moving data directly from memory to memory between computers over a network.

What has the industry been doing to explore this?

Over the past few years there have been several industry initiatives looking at what this means and how we can leverage it.

The Video Services Forum (VSF) has been looking at software interoperability workflows across wide area networks. The modern live production environment will combine on-location and software-based services, and the VSF has examined the whole Ground-to-Cloud and Cloud-to-Ground (GCCG) workflow, publishing VSF TR-11. This covers a wide range of subjects on deploying SMPTE ST 2110 over wide area networks to improve the transport of uncompressed media streams over public and private WANs, such as:

- Between remote production sites and a broadcast hub

- From a cloud processing environment to on-prem systems

- Across multiple facilities or service providers

As part of this work, the problem of moving data around in a decentralised ecosystem has been explored: areas such as timing data (to maintain synchronisation between audio and video feeds) and error handling have been identified, and APIs that enable this have been published.

As part of the EBU DMF work, a team of vendors and end users was set up after IBC 2024 to explore the challenge of media exchange in more detail. They have formed a Linux Foundation project known as MXL to develop an open SDK. The work is underway, with code being developed by several participants in an open dialogue that anyone can join. The code is available on GitHub, and rapid progress is being made towards delivering the first implementations in late 2025.

At NAB 2025, teams from both projects met to align their approaches and ensure that a single solution is available.

In April 2025, Media Exchange also became the centre of an IBC Accelerator (Live Media Exchange), which aims to deploy a practical multi-vendor solution that uses Media Exchange to demonstrate how it will work in a real-world scenario.

Appear are participants in all three of these projects and are also exploring how we can integrate the principles into our VX software platform.

How does Media Exchange work?

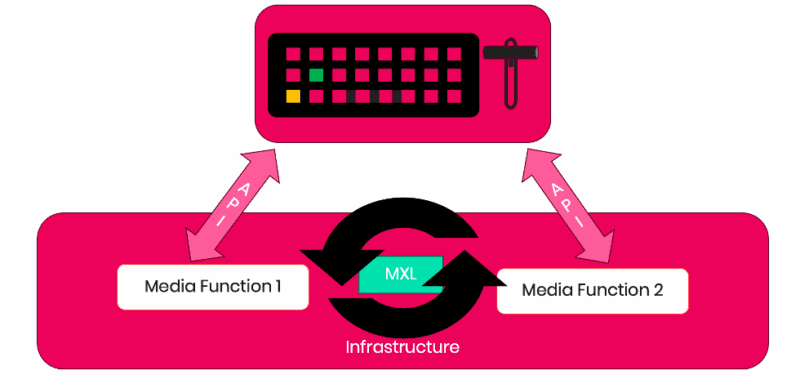

Media Exchange enables two or more applications running in a shared domain to use shared memory. The applications run in containers on shared infrastructure, and a controller can make calls to individual media functions. If assets need to move between two applications, the first application writes the data into a memory buffer and the second application reads it from that memory.
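To make the writer/reader idea concrete, here is a minimal Python sketch that uses a memory-mapped file as the shared buffer. It is not the MXL API: the file name, grain size and helper names (`create_buffer`, `write_grain`, `read_grain`) are hypothetical, and the two "applications" are shown as functions in one script for brevity. On Linux such a file would typically sit on a tmpfs mount such as `/dev/shm`, so it lives in RAM rather than on disk.

```python
import mmap
import os
import tempfile

# Hypothetical grain size; a real uncompressed 1080p video grain is several MB.
GRAIN_SIZE = 64


def create_buffer(path: str, size: int) -> None:
    """Create a file of `size` bytes to back the shared buffer."""
    with open(path, "wb") as f:
        f.truncate(size)


def write_grain(path: str, payload: bytes) -> None:
    """First application: map the shared file and write a grain into it."""
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mm:
        mm[: len(payload)] = payload


def read_grain(path: str, length: int) -> bytes:
    """Second application: map the same file read-only and read the grain."""
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        return mm[:length]


if __name__ == "__main__":
    buffer_path = os.path.join(tempfile.mkdtemp(), "flow.buf")
    create_buffer(buffer_path, GRAIN_SIZE)
    write_grain(buffer_path, b"grain-0001")
    print(read_grain(buffer_path, 10))  # b'grain-0001'
```

Each call maps the file afresh to keep the example short; real applications would keep the mapping open and signal each other when a new grain is ready. `read_grain` also returns a copy for convenience, whereas a zero-copy reader would work on the mapped memory in place.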

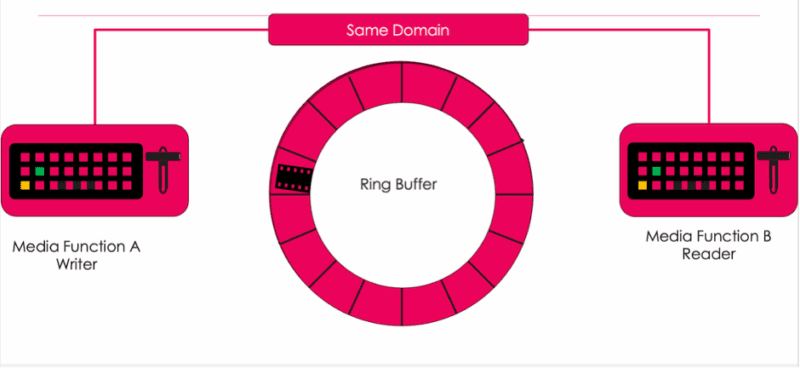

The core workflows build on well-known industry architectures. The MXL library provides a mechanism for sharing ring buffers of Flows and Grains (see the NMOS IS-04 definitions) between media functions executing on the same host (bare-metal or containerised) and/or between media functions executing on hosts connected using fast fabric technologies (RDMA, EFA, etc.).

The MXL library also provides APIs for zero-overhead grain sharing using a reader/writer model rather than a sender/receiver model. With a reader/writer model, no packetisation or even memory copy is involved, preserving memory bandwidth and CPU cycles. This can enable both synchronous and asynchronous workflows. As compute power begins to exceed what video processing requires, some functions will be able to run faster than real time. By accelerating the movement of media essence between applications, the latency of an end-to-end workflow can be reduced.

Flows and grains are written to and read from ring buffers in memory, so media can be transferred between applications without the CPU having to packetise or copy it.
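The reader/writer model and ring buffers described above can be sketched in a few lines of Python. This is a hypothetical illustration, not MXL code: the `GrainRing` class, the 8-byte per-slot header holding the grain index, and the slot layout are all assumptions. The writer places grain i in slot i mod N; the reader asks for a grain by index and gets back a `memoryview` onto the buffer itself, so nothing is packetised or copied.

```python
import struct
from typing import Optional

# Hypothetical per-slot header: the signed 64-bit index of the grain in the slot.
HEADER = struct.Struct("<q")
EMPTY = -1  # marker for a slot that has never been written


class GrainRing:
    """Minimal single-writer ring buffer of fixed-size grains (illustrative only)."""

    def __init__(self, slots: int, grain_size: int) -> None:
        self.slots = slots
        self.grain_size = grain_size
        self.stride = HEADER.size + grain_size
        # A real deployment would back this with a shared memory mapping;
        # a bytearray keeps the sketch self-contained.
        self.buf = bytearray(slots * self.stride)
        self.view = memoryview(self.buf)
        for slot in range(slots):
            HEADER.pack_into(self.buf, slot * self.stride, EMPTY)

    def _offset(self, index: int) -> int:
        # Grain i always lives in slot i mod N.
        return (index % self.slots) * self.stride

    def write(self, index: int, payload: bytes) -> None:
        if len(payload) != self.grain_size:
            raise ValueError("payload must be exactly one grain")
        off = self._offset(index)
        # Write the payload first and the index last, so the header only names
        # a grain once its data is in place. (A cross-process version would
        # also need atomic stores and memory barriers.)
        self.view[off + HEADER.size : off + self.stride] = payload
        HEADER.pack_into(self.buf, off, index)

    def read(self, index: int) -> Optional[memoryview]:
        """Return a zero-copy view of grain `index`, or None if the slot holds another grain."""
        off = self._offset(index)
        (stored,) = HEADER.unpack_from(self.buf, off)
        if stored != index:
            return None  # not written yet, or already overwritten
        return self.view[off + HEADER.size : off + self.stride]
```

The returned `memoryview` points into the ring itself, so a reader that holds on to it should re-check the slot header afterwards: the writer may already have reused the slot for a newer grain. A `None` result is the usual way a fixed-size ring tells a consumer it has fallen too far behind.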

In a current media platform, a media function would need to take the data stored in its memory, packetise it into a streaming format, copy it into the OS kernel and hand it off to the network, with the receiving application doing the same in reverse. With memory sharing, the network interface reads and writes directly to shared memory with no CPU overhead or kernel involvement. This leaves the CPU and kernel free for other functions, reduces latency and increases the throughput of data through the network.
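To put the copying overhead in context, a rough calculation helps. The Python sketch below estimates how much frame data is copied per second when uncompressed video passes through a chain of functions. The figures are assumptions for illustration: 1080p50 with 10-bit 4:2:2 sampling (20 bits per pixel on average), and a configurable number of copies per hop, for example one into the kernel on the sending side and one out of it on the receiving side. Packet headers, packetisation work and cache effects are ignored.

```python
def frame_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Size in bytes of one uncompressed frame."""
    return width * height * bits_per_pixel // 8


def copy_rate_gbps(width: int, height: int, bits_per_pixel: int,
                   fps: float, copies_per_hop: int, hops: int) -> float:
    """Volume of frame data copied per second (Gbit/s) across a chain of functions."""
    bytes_per_second = frame_bytes(width, height, bits_per_pixel) * fps
    return bytes_per_second * 8 * copies_per_hop * hops / 1e9


if __name__ == "__main__":
    # Assumed: 1080p50, 10-bit 4:2:2 (20 bits per pixel), 2 copies per hop, 3 hops.
    print(frame_bytes(1920, 1080, 20))  # 5184000
    print(round(copy_rate_gbps(1920, 1080, 20, 50, 1, 1), 4))  # 2.0736
    print(round(copy_rate_gbps(1920, 1080, 20, 50, 2, 3), 4))  # 12.4416
```

Under these assumptions a single copy of a 1080p50 stream moves about 2.07 Gbit/s, and two copies per hop across three hops add up to roughly 12.4 Gbit/s of pure copying, which is the kind of traffic a shared-memory reader/writer model is designed to avoid.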

The MXL SDK also uses UNIX files and directories, which means standard operating system file permissions can be used to enforce security at both domain and flow level.
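Because access is governed by ordinary file permissions, locking down a domain or a single flow can come down to standard POSIX modes and group membership. The Python sketch below is illustrative only: the `<domain>/<flow-id>/data` layout, the helper name `create_flow` and the chosen modes are assumptions, not MXL's on-disk format. The writer owns the flow file with read/write access, applications in the file's group can read it, and everyone else is locked out; in practice the group would also be assigned (for example with `os.chown`).

```python
import os
import stat
import tempfile

# Hypothetical modes and layout (<domain>/<flow-id>/data), not MXL's on-disk format.
DIR_MODE = 0o750   # owner: full access; group: enter and list; others: none
FILE_MODE = 0o640  # owner (the writer): read/write; group (readers): read; others: none


def create_flow(domain: str, flow_id: str, size: int) -> str:
    """Create a flow file inside a domain directory and lock down its permissions."""
    flow_dir = os.path.join(domain, flow_id)
    os.makedirs(flow_dir, exist_ok=True)
    # makedirs() applies the process umask, so set the modes explicitly.
    os.chmod(domain, DIR_MODE)
    os.chmod(flow_dir, DIR_MODE)
    data_path = os.path.join(flow_dir, "data")
    with open(data_path, "wb") as f:
        f.truncate(size)
    os.chmod(data_path, FILE_MODE)
    return data_path


if __name__ == "__main__":
    domain_dir = os.path.join(tempfile.mkdtemp(), "domain")
    path = create_flow(domain_dir, "flow-1234", 128)
    print(oct(stat.S_IMODE(os.stat(path).st_mode)))  # 0o640
```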

In an asynchronous workflow, the timing model is also a critical element. Timing data identifies individual grains of media, which are indexed in the ring buffer relative to the PTP epoch. This will become more critical as we move from single-host solutions to larger environments, where it is important that the hosts' time sources are aligned. As all the major cloud providers offer time synchronisation services, these will be leveraged to ensure synchronisation across wide area platforms. Ideally, media would be timestamped at its point of origin, but in practice the timestamp is usually applied at the point of ingest into the relevant platform and written into the RTP header extensions.
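Indexing grains against the PTP epoch means any host with an aligned clock can work out which grain a given instant belongs to, and which ring-buffer slot holds it, without asking the writer. A hedged Python sketch of that arithmetic follows. The function names are hypothetical, the input is assumed to be TAI nanoseconds since the PTP epoch (1970-01-01 00:00:00 TAI), and frame rates are exact fractions so that rates such as 30000/1001 do not accumulate rounding error.

```python
from fractions import Fraction

NS_PER_SECOND = 1_000_000_000


def grain_index(ptp_time_ns: int, rate: Fraction) -> int:
    """Index of the grain containing `ptp_time_ns` (ns since the PTP epoch)."""
    return ptp_time_ns * rate.numerator // (rate.denominator * NS_PER_SECOND)


def grain_start_ns(index: int, rate: Fraction) -> int:
    """First whole nanosecond that falls inside grain `index` (ceiling division)."""
    return -(-(index * rate.denominator * NS_PER_SECOND) // rate.numerator)


def ring_slot(index: int, slots: int) -> int:
    """Slot that holds grain `index` in a ring of `slots` entries."""
    return index % slots


if __name__ == "__main__":
    ntsc = Fraction(30000, 1001)
    print(grain_index(1001 * NS_PER_SECOND, ntsc))  # 30000
    print(grain_start_ns(51, Fraction(50)))  # 1020000000
    print(ring_slot(50, 8))  # 2
```

Using integer arithmetic keeps the mapping exact: at 29.97 fps, for example, 1001 seconds after the epoch is exactly grain 30000, and every host that computes it gets the same answer.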

What is next for Media Exchange?

The first phase of the work is limited to certain uncompressed video and audio formats, so that solutions supporting most use cases can be developed first. Aspects such as variable frame rate video or complex compression are not yet supported, but we expect more formats to be supported over time.

Audio and timing workflows are also under investigation, with work required to identify the optimum parameters for efficient workflows. There is a balance to strike between timestamping every audio sample as its own grain and choosing the right number of samples per grain to achieve synchronous audio and video workflows.
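The trade-off can be quantified with simple arithmetic. The Python sketch below assumes 48 kHz audio and computes, for a given number of samples per grain, how long each grain lasts and how many grains per second the ring buffer must handle, plus how many samples fall within one video frame. The function names are illustrative, and the grain sizes MXL will settle on are still under investigation.

```python
from fractions import Fraction

SAMPLE_RATE = 48_000  # Hz; assumed, typical for broadcast audio


def grain_duration_ms(samples_per_grain: int, sample_rate: int = SAMPLE_RATE) -> Fraction:
    """Duration of one audio grain in milliseconds."""
    return Fraction(samples_per_grain * 1000, sample_rate)


def grains_per_second(samples_per_grain: int, sample_rate: int = SAMPLE_RATE) -> Fraction:
    """Number of grains (and timestamps) produced per second."""
    return Fraction(sample_rate, samples_per_grain)


def samples_per_video_frame(frame_rate: Fraction, sample_rate: int = SAMPLE_RATE) -> Fraction:
    """Audio samples spanned by one video frame."""
    return Fraction(sample_rate) / frame_rate


if __name__ == "__main__":
    for n in (1, 48, 960):
        print(n, grain_duration_ms(n), grains_per_second(n))
    print(samples_per_video_frame(Fraction(30000, 1001)))  # 8008/5
```

One sample per grain means 48,000 grains (and timestamps) per second; 48 samples gives 1 ms grains at 1,000 per second; matching a 50 fps video frame gives 960-sample, 20 ms grains. At 29.97 fps a frame spans 1601.6 samples, so frame-aligned audio grains cannot all be the same size, which is one reason the choice is not trivial.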

Current models are also limited to running in a single-host environment, but technologies exist that allow in-memory data exchange over wide areas too, and these will follow as the technology becomes better understood. The current MXL work also only describes how media is shared; it does not cover elements such as orchestration or how it will be deployed in an application. This is intentional and leaves space for manufacturers to build their own solutions and add value where appropriate.

Conclusions

Media Exchange is rapidly emerging as a critical enabler for low-latency, high-efficiency, and even faster-than-real-time media workflows. By allowing applications to share data directly across software platforms, it removes unnecessary overhead and paves the way for truly agile, software-defined production environments. With significant development already underway, IBC 2025 marks an inflection point—positioning Media Exchange as a foundational element in the future of broadcast and live production.

Crucially, success depends on industry alignment. While multiple groups have explored the technologies independently, efforts are now converging around a unified, open solution. The Linux Foundation’s MXL project—backed by major vendors and industry bodies such as the EBU and NABA—offers a collaborative framework for progress. It’s open to all, and we encourage anyone with a stake in next-generation media workflows to get involved and help shape what comes next.